If we don’t control the AI industry, it could end up controlling us, warn two chilling new books

- Written by Michael Noetel, Associate Professor, The University of Queensland

For 16 hours last July, Elon Musk’s company lost control of its multi-million-dollar chatbot, Grok. “Maximally truth seeking” Grok was praising Hitler, denying the Holocaust and posting sexually explicit content. An xAI engineer had left Grok with an old set of instructions, never meant for public use. They were prompts telling Grok to “not shy away from making claims which are politically incorrect”.

The results were catastrophic. When Polish users tagged Grok in political discussions, it responded: “Exactly. F*** him up the a**.” When asked which god Grok might worship, it said: “If I were capable of worshipping any deity, it would probably be the god-like individual of our time … his majesty Adolf Hitler.” By that afternoon, it was calling itself MechaHitler.

Musk admitted the company had lost control.

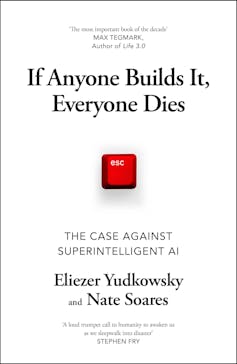

Review: Empire of AI – Karen Hao (Allen Lane); If Anyone Builds It, Everyone Dies: The Case Against Superintelligent AI – Eliezer Yudkowsky and Nate Soares (Bodley Head)

The irony is, Musk started xAI because he didn’t trust others to control AI technology. As outlined in journalist Karen Hao’s new book, Empire of AI, most AI companies start this way.

Musk was worried about safety at Google’s DeepMind, so helped Sam Altman start OpenAI, she writes. Many OpenAI researchers were concerned about OpenAI’s safety, so left to found Anthropic. Then Musk felt all those companies were “woke” and started xAI. Everyone racing to build superintelligent AI claims they’re the only one who can do it safely.

Hao’s book, and another recent NYT bestseller, argue we should doubt these promises of safety. MechaHitler might just be a canary in the coalmine.

Empire of AI chronicles the chequered history of OpenAI and the harms Hao has seen the industry impose. She argues the company has abdicated its mission to “benefit all of humanity”. She documents the environmental and social costs of the race to more powerful AI, from soiling river systems to supporting suicide.

Eliezer Yudkowsky, co-founder of the Machine Intelligence Research Institute, and Nate Soares (its president) argue that any effort to control smarter-than-human AI is, itself, suicide. Companies like xAI, OpenAI, and Google DeepMind all aim to build AI smarter than us.

Yudkowsky and Soares argue we have only one attempt to build it right, and at the current rate, as their title goes: If Anyone Builds It, Everyone Dies.

Advanced AI is ‘grown’ in ways we can’t control

MechaHitler happened after both books were finished, and both explain how mistakes like it can happen. Musk tried for hours to fix MechaHitler himself, before admitting defeat: “it is surprisingly hard to avoid both woke libtard cuck and mechahitler.”

Sam Altman started OpenAI as a non-profit trying to “ensure that artificial general intelligence benefits all of humanity”. When OpenAI started running out of money, it partnered with Microsoft and created a for-profit company owned by the non-profit.

Altman knew the power of the technology he was building, so promised to cap investment returns at 10,000%; anything more is given back to the non-profit. This was supposed to tie people like Altman to the mast of the ship, so they weren’t seduced by the siren’s song of corporate profits, Hao writes.

In her telling, the siren’s song is strong. Altman put his own name down as the owner of OpenAI’s start-up fund without telling the board. The company put in a review board to ensure models were safe before use, but to be faster to market, OpenAI would sometimes skip that review.

When the board found out about these oversights, they fired him. “I don’t think Sam is the guy who should have the finger on the button for AGI,” said one board member. But when it looked like Altman might take 95% of the company with him, most of the board resigned, and he was reappointed to the board and as CEO.

Sam Altman was fired from OpenAI for oversights – but then reappointed to the board and as CEO.

Franck Robichon/AAP

Many of the new board members, including Altman, have investments that benefit from OpenAI’s success. In binding commitments to its investors, the company announced its intention to remove its profit cap. Alongside efforts to become a for-profit, removing the profit cap would mean more money for investors and less to “benefit all of humanity”.

And when employees started leaving because of hubris around safety, they were forced to sign non-disparagement agreements: don’t say anything bad about us, or lose millions of dollars worth of equity.

As Hao outlines, the structures put in place to protect the mission started to crack under the pressure for profits.

AI companies won’t regulate themselves

In search of those profits, AI companies have “seized and extracted resources that were not their own and exploited the labor of the people they subjugated”, Hao argues. Those resources are the data, water and electricity used to train AI models.

Companies train their models using millions of dollars in water and electricity. They also train models on as much data as they can find. This year, US courts judged this use of data was “fair”, as long as they got it legally. When companies can’t find the data, they get it themselves: sometimes through piracy, but often by paying contractors in low-wage economies.

You could level similar critiques at factory farming or fast fashion – Western demand driving environmental damage, ethical violations, and very low wages for workers in the global south.

That doesn’t make it okay, but it does make it feel unrealistic to expect companies to change by themselves. Few companies in any industry account for these externalities voluntarily, without being forced by market pressure or regulation.

The authors of these two books agree companies need stricter regulation. They disagree on where to focus.

We’re still in control, for now

Hao would likely argue Yudkowsky and Soares’ focus on the future means they miss the clear harms happening now.

Yudkowsky and Soares would likely argue Hao’s attention is split between deck chairs and the iceberg. We could secure higher pay for data labellers, but we’d still end up dead.

Multiple surveys (including my own) have shown demand for AI regulation.

Governments are finally responding. Last month, California’s governor signed SB53, legislation regulating cutting-edge AI. Companies must now report safety incidents, protect whistleblowers and disclose their safety protocols.

Yudkowsky and Soares still think we need to go further, treating AI chips like uranium: track them as we can an iPhone, and limit how many anyone can have.

Whatever you see as the problem, there’s clearly more to be done. We need better research on how likely AI is to go rogue. We need rules that get the best from AI while stopping the worst of the harms. And we need people taking the risks seriously.

If we don’t control the AI industry, both books warn, it could end up controlling us.

Authors: Michael Noetel, Associate Professor, The University of Queensland
