After defamation ruling, it's time Facebook provided better moderation tools

- Written by Fiona R Martin, Senior Lecturer in Convergent and Online Media, University of Sydney

After a NSW Supreme Court judge ruled this week that Australian publishers are liable for defamatory comments on their Facebook pages, it’s clear that page owners need to get serious about social media management.

The ruling suggests that operators of commercial Facebook pages may need to hide all comments by default so that they can be checked for defamation before they are seen by the public.

The problem is that Facebook was not designed for users to pre-moderate comments. It was built to deliver us “frictionless”, immediate communication.

Judge Stephen Rothman’s contentious ruling relied on expert evidence that Facebook publishers can use a software hack to stop comments from appearing. By adding common words such as pronouns, “the” and “a” to their page moderation filter, owners can ostensibly hide comments and then un-hide them once they’ve been checked for illegal content.

This is obviously impractical. Hiding comments will stop many people from posting, because they won’t see their contributions appear as they do elsewhere on Facebook. It will also stymie conversations, unless companies hire 24/7 moderation services. The cost would be prohibitive for most businesses.

An appeal against the decision is likely. In the meantime, companies with a Facebook presence should be doing two things:

- hiring expert community managers

- lobbying platforms for better moderation tools.

Better moderation tools might include moderation dashboards and audit trails, the option to pre-moderate all comments, the ability to edit and move comments, and easier ways to manage suspended and banned users.

Why we need professionals

Community managers are a new and highly educated sector of the digital workforce. They build and facilitate online groups, as well as oversee moderation.

The results of the Australian Community Managers (ACM) network’s annual survey, released this week, show that this is a smart and highly feminised workforce: 82% have graduate qualifications and 72% are women.

Oddly, while most companies now use Facebook for customer engagement and promotion, many don’t yet employ senior professionals to oversee their community building.

Many employers and recruiters still see social media and online community manager roles as “junior and low skilled”. This is despite the fact that these professionals are often on the front line of client relations. They manage complex social infrastructure and handle tricky publishing decisions – such as what comments are legal, and which ones could lead to toxic conversations.

Venessa Paech, an expert in community management, addresses SWARM, the Australian Community Managers conference.

Author provided

Only 22% of community professionals surveyed by the ACM said their role is understood and valued by the organisations they work with.

In light of the Rothman ruling, ACM founder and convenor Venessa Paech says:

…now more than ever companies need to recognise the value of having professionals manage their communities and social media sites, and planning ways to discourage the posting of harmful content.

Facebook’s platforms are popular

The ACM survey looked closely at the types of platforms businesses are using to host online groups. It found that Facebook owns the two most used non-specialist applications for building community:

- Groups

- Workplace.

Facebook Groups, the platform’s initial venture into community software, has increased in popularity from 20% to 23% since last year’s survey. Facebook Workplace, its move into enterprise online community, grew 10% in the same period.

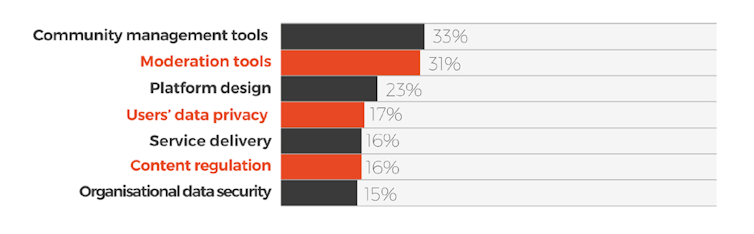

The ACM revealed that the issues community managers most wanted to discuss with their platform hosts were better management and moderation tools.

Top issues community managers most want to discuss with their platform provider

ACM Career Survey 2019

As one respondent said:

I run a project that is often impacted by hate speech and online harassment. I would love to have a closer relationship with the platform provider’s staff to ensure they support us and share insight into how content and comment moderation is evolving on their end.

That brings us to the second step Australian publishers need to take to tackle illegal speech online – lobbying Facebook and other platforms for effective community management and moderation tools.

Better moderation tools are essential

The Rothman ruling, unworkable as it is, is just the latest indication of how the judiciary and governments worldwide are bent on regulating platforms to control illegal speech.

As digital law scholar Nic Suzor has noted, there is an increasing sense that technology companies do not have our consent to govern the social world in the way they have been for the past decade.

The question, then, is whether it’s more effective for regulators to work with platforms to devise better moderation approaches than to unilaterally punish them, or their users (such as media outlets), for breaches of speech laws.

Rothman’s ruling punishes users, while Scott Morrison’s recent Sharing of Abhorrent Violent Material Act punishes platforms. And yet there is no guarantee that either will prevent harmful content from being posted and spread.

Instead, as UN Secretary-General António Guterres said on Wednesday, tackling the “tsunami of hatred” that is gathering speed online across the world requires working with the platforms towards solutions.

So if you’re a Facebook publisher, the time is ripe to reach out and tell the platform what you need to keep your community safe and successful. And if you don’t know where to start, hire an online community manager to help.
